Meet AEL Studio: AI agents your way, simple and secure

Run an OpenAI-Compatible API on Your Hardware

Create AI agents in minutes without technical expertise. Get full OpenAI API compatibility with open source models. Deploy anywhere, own your infrastructure, and develop custom AI agents, all with open source software.

Data sharing

Infrastructure

MIT Licensed

OpenAI API Compatible

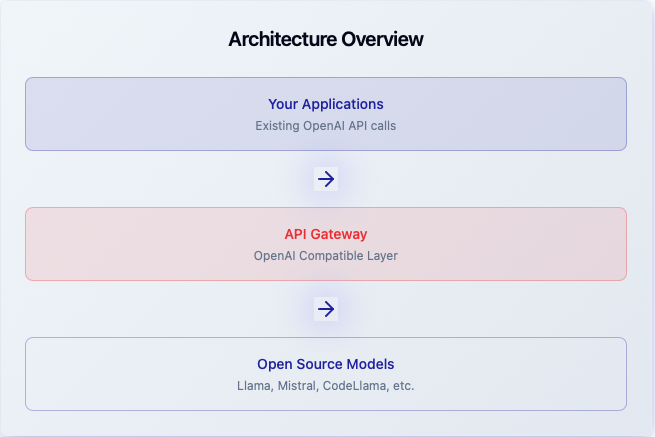

Simple architecture

Get up and running in minutes with our battle-tested architecture, designed for enterprise scale and developer simplicity.

1. Deploy Framework. Install our lightweight framework on your infrastructure using Docker, Kubernetes, or bare metal.

2. Load Models. Choose from dozens of open source models or bring your own, with automatic optimization for your hardware.

3. Configure API. Set up authentication, rate limiting, and monitoring. The API is compatible with existing OpenAI integrations.

4. Build Agents. Develop custom AI agents using our SDK, then deploy and scale them across your infrastructure.
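
To make "compatible with existing OpenAI integrations" concrete, here is a minimal sketch of the kind of request an OpenAI-style client sends to a chat completions endpoint. It uses only the Python standard library and builds the request without sending it. The base URL `http://localhost:8000/v1`, the key `local-key`, and the model name `my-open-model` are placeholders assumed for illustration; they are not values documented by AEL Studio, and `build_chat_request` is a hypothetical helper, not part of its SDK.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request.

    All argument values are supplied by the caller; nothing here is
    specific to AEL Studio beyond the assumed OpenAI-compatible shape.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        # OpenAI-style servers expose chat completions under /chat/completions.
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values (assumptions): point these at your own deployment.
request = build_chat_request(
    base_url="http://localhost:8000/v1",
    api_key="local-key",
    model="my-open-model",
    prompt="Hello",
)
print(request.get_method(), request.full_url)
```

Passing `request` to `urllib.request.urlopen` would send it to a running server. In an existing OpenAI integration, the equivalent change is usually just pointing the client's base URL at the self-hosted endpoint.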